How MCP Uses Tokens & Does Performance Degrade as Tools Increase?

How MCP Uses Tokens and What Happens When Tools Increase

While testing MCP myself, I noticed something interesting: the model wasn’t slowing down because MCP was heavy but because of how much information it ended up seeing. The more tools I added, the more tokens the model used. MCP itself wasn’t consuming those tokens; the model simply had more context to read before deciding which tool to call.

How MCP Actually Uses Tokens

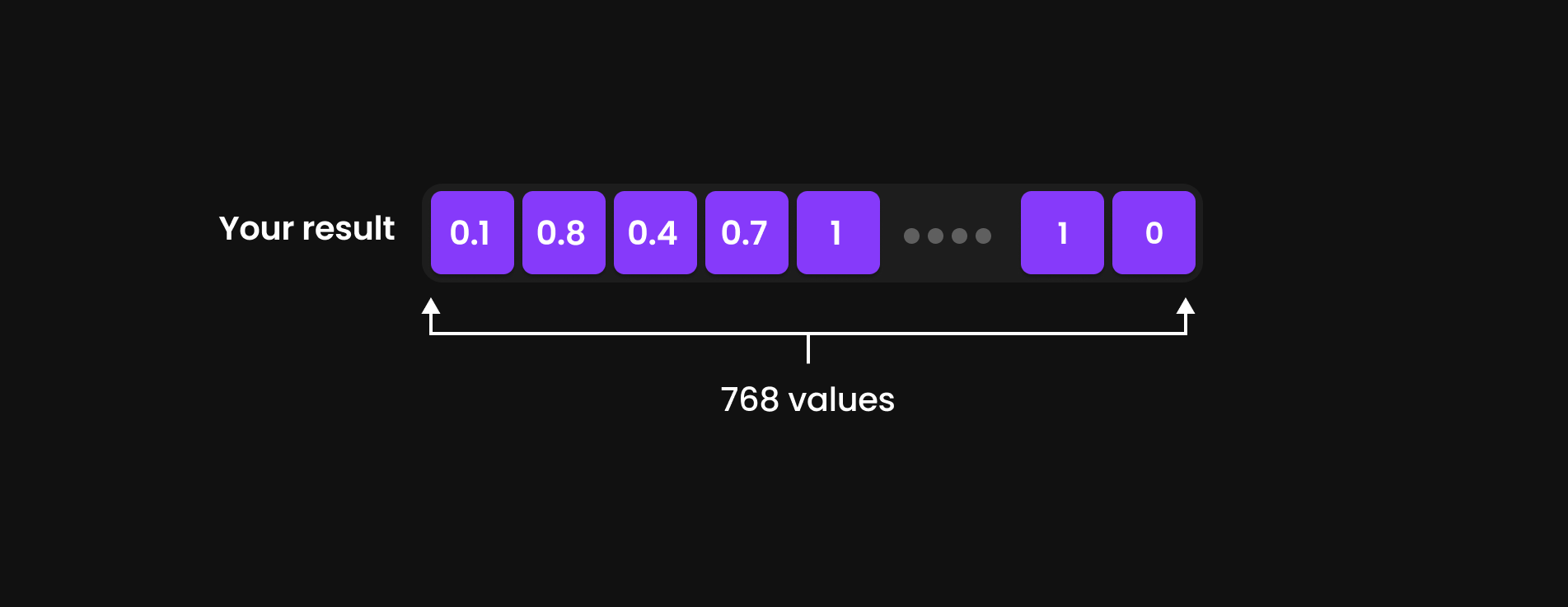

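MCP does not directly consume tokens. The model spends tokens only on text that enters its context, either as input it reads or as output it generates:

- Tool descriptions (each tool’s name, description, and input schema)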

- Tool responses

- Its own reasoning around those responses
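To get a feel for where the tokens go, here is a minimal Python sketch that estimates the prompt overhead of a set of tool definitions. It assumes the common rule of thumb of roughly four characters per token (real tokenizers differ by model) and assumes the client serializes each tool’s `name`, `description`, and `inputSchema` as JSON into the prompt on every request; the exact format varies by client. The tool definitions are hypothetical.

```python
import json
import math

# Rough heuristic (assumption): about 4 characters per token.
# Real counts depend on the model's tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count for a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def tool_definition_tokens(tool: dict) -> int:
    """Estimate the tokens one tool definition adds to the prompt.

    Assumes the client sends name, description, and inputSchema as JSON.
    """
    payload = {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "inputSchema": tool.get("inputSchema", {}),
    }
    return estimate_tokens(json.dumps(payload))


def prompt_overhead(tools: list[dict], turns: int) -> int:
    """Tokens spent on tool definitions across a conversation.

    Definitions are assumed to be resent on every turn, so the cost
    grows with both the number of exposed tools and the number of turns.
    """
    per_turn = sum(tool_definition_tokens(t) for t in tools)
    return per_turn * turns


# Hypothetical tools for illustration.
tools = [
    {
        "name": "get_weather",
        "description": "Return current weather for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
    {
        "name": "search_docs",
        "description": "Full-text search over internal documentation.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
]

print(prompt_overhead(tools, turns=1))
print(prompt_overhead(tools, turns=10))
```

The exact numbers matter less than the shape of the cost: under these assumptions, every exposed tool is paid for on every request, whether or not the model ends up calling it.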

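In my tests, what cost tokens was a tool’s definition being placed in the model’s context, not the tool existing on the server. A tool that the client never loads into the prompt costs zero tokens, but a tool whose definition is sent to the model costs tokens on every request, whether or not it gets called.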

When Adding Tools Starts Hurting Performance

I began noticing performance drops when I added many tools with similar names or large input schemas. Large schemas add tokens to every request, and overlapping names made the model spend more reasoning on deciding which tool to call, which showed up as extra token usage.
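One cheap way to catch the similar-name problem before the model runs into it is to compare tool names pairwise. This sketch uses Python’s `difflib.SequenceMatcher` as a rough string-similarity proxy. String similarity only approximates what actually confuses a model, and the 0.8 threshold and the tool names are arbitrary, hypothetical choices.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similar_tool_names(
    names: list[str], threshold: float = 0.8
) -> list[tuple[str, str, float]]:
    """Return pairs of tool names whose similarity ratio meets the threshold.

    SequenceMatcher.ratio() returns a score from 0.0 to 1.0; it is used
    here as a rough proxy for how easily two tools might be confused.
    """
    pairs = []
    for a, b in combinations(names, 2):
        ratio = SequenceMatcher(None, a, b).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 2)))
    return pairs


# Hypothetical tool names.
names = ["get_user", "get_users", "fetch_user_profile", "delete_file"]
for a, b, score in similar_tool_names(names):
    print(f"{a} <-> {b}: {score}")
```

Flagged pairs are candidates for renaming or merging, or at least for descriptions that spell out when to use each one.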

Large tool responses had the same effect. Even when a tool ran quickly on the server, its full output went back into the model’s context, and the model spent many tokens reading it.
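One way to act on this is to shape the response on the server before it reaches the model. Here is a minimal Python sketch, assuming the tool produces a list of records and returns them as JSON text: it keeps only the fields the model needs, caps the record count, and drops records until the text fits a character budget. The function name, field names, and limits are hypothetical.

```python
import json

# Hypothetical budget: tune per tool and per model.
MAX_RESPONSE_CHARS = 2000


def shrink_response(
    records: list[dict],
    fields: list[str],
    limit: int = 20,
    max_chars: int = MAX_RESPONSE_CHARS,
) -> str:
    """Return a compact JSON response for the model.

    Keeps only the requested fields, caps the number of records, and
    drops records from the end until the serialized text fits max_chars.
    """
    trimmed = [{k: r[k] for k in fields if k in r} for r in records[:limit]]
    while True:
        body = {"results": trimmed, "returned": len(trimmed), "total": len(records)}
        text = json.dumps(body, separators=(",", ":"))
        if len(text) <= max_chars or not trimmed:
            return text
        trimmed = trimmed[:-1]  # drop the last record and try again


# Hypothetical data: each row carries a large field the model doesn't need.
rows = [{"id": i, "name": f"item-{i}", "blob": "x" * 500} for i in range(100)]
out = shrink_response(rows, fields=["id", "name"])
print(len(out), json.loads(out)["returned"])
```

Including `returned` and `total` tells the model that more data exists, so it can ask a narrower follow-up question instead of receiving everything up front. Dropping whole records, rather than cutting the string, keeps the output valid JSON.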

What Keeps MCP Fast

Here are the patterns that consistently kept performance stable during my testing:

- Clear, distinct tool names

- Small and focused responses

- Breaking complex functionality into multiple simple tools

- Loading only the tools needed for a specific task
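The last pattern, loading only the tools needed for a task, can be approximated on the client side by tagging tools and exposing only the ones that match the current task. This is a minimal sketch: the `Tool` class, the tags, and `tools_for_task` are hypothetical, and real MCP clients may provide their own ways to enable or disable tools.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    name: str
    description: str
    tags: set[str] = field(default_factory=set)


def tools_for_task(catalog: list[Tool], task_tags: set[str]) -> list[Tool]:
    """Return only the tools whose tags overlap the task's tags."""
    return [t for t in catalog if t.tags & task_tags]


# Hypothetical catalog of tools.
catalog = [
    Tool("get_weather", "Current weather for a city.", {"weather"}),
    Tool("search_docs", "Search internal documentation.", {"docs", "search"}),
    Tool("create_ticket", "Open a support ticket.", {"support"}),
    Tool("list_tickets", "List open support tickets.", {"support"}),
]

selected = tools_for_task(catalog, {"support"})
print([t.name for t in selected])
```

Only the selected definitions would then be sent to the model, so the remaining tools add nothing to the prompt for that task.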

Final Thoughts

Working with MCP directly made it clear that the protocol itself is lightweight. The real cost comes from how much the model must read and reason about. Good tool design keeps token usage low, even as your toolset grows.